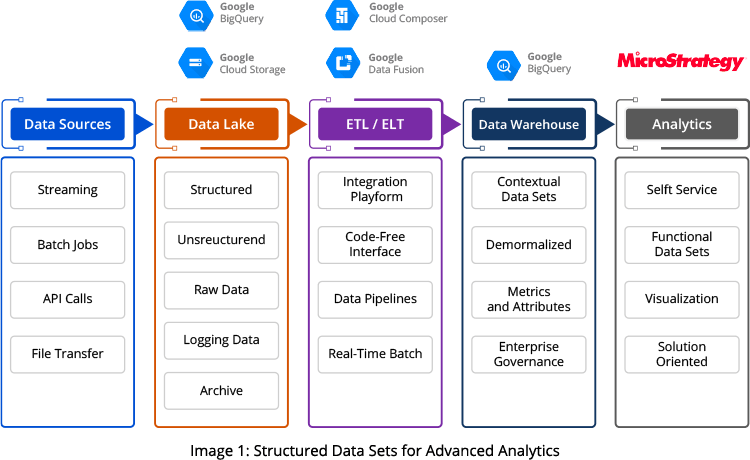

After analyzing the company's goals and current infrastructure, our Information Analytics team built a solution on a combination of tools, including Google BigQuery, Google Cloud services, Apache Airflow, and Cloud Composer. The solution allowed the company to source, load, and transform data extracted from a data lake into meaningful data sets suitable for advanced analytics.

The source data is first loaded by Kafka jobs into Google Cloud Storage (GCS) buckets and a BigQuery data lake. From GCS and the data lake, the data undergoes an ETL/ELT (extract, transform, load / extract, load, transform) process in Google Cloud Data Fusion before being transferred into BigQuery. There, the correlated data is enriched and transformed, and it becomes available for the company's various analytical and reporting needs.
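The flow described above can be modeled as a small dependency graph, which is roughly how an orchestrator such as Airflow would order the work. The following is only an illustrative sketch using the Python standard library: the stage names are hypothetical labels chosen for this example, not the identifiers used in the actual deployment.

```python
from graphlib import TopologicalSorter

# Map each stage to the set of stages it depends on.
# All stage names are hypothetical labels for the steps described in the text.
PIPELINE = {
    "gcs_landing": {"kafka_ingest"},
    "bigquery_data_lake": {"kafka_ingest"},
    "data_fusion_etl": {"gcs_landing", "bigquery_data_lake"},
    "bigquery_load": {"data_fusion_etl"},
    "bigquery_enrich_transform": {"bigquery_load"},
}


def execution_order(pipeline):
    """Return the stages in an order that respects every dependency."""
    return list(TopologicalSorter(pipeline).static_order())


order = execution_order(PIPELINE)
print(" -> ".join(order))
```

The two landing stages have no dependency on each other, so they could run in parallel; only their position relative to the Kafka ingest and the Data Fusion step is fixed.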

Key benefits of our solution:

- Capture data aligned with business goals: The new system captures and transfers data from different sources according to business requirements, with 85% lower latency and 99% improved data accuracy.

- Process massive data volumes using comprehensive tools: Google BigQuery provides tools designed to operate on vast amounts of data and improves query performance through its data aggregation, partitioning, and clustering features.

- Get near real-time actionable data: As part of the solution, our team migrated the company's API and fraud-checking jobs to BigQuery. The move reduced the large-file processing issues that previously recurred every week, month, and quarter. We also configured the scheduled jobs as BigQuery scripts that scan only a minimal subset of the data at customized intervals.
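To illustrate how partitioning, clustering, and minimal-subset scans fit together, here is a hedged sketch that builds two BigQuery SQL statements as strings: one creating a date-partitioned, clustered table, and one scheduled-job style query that filters on the partition column so only recent partitions are read. The table name, column names, and lookback window are assumptions made for this example; they do not come from the project itself.

```python
from datetime import date, timedelta


def partitioned_table_ddl(table, partition_column, cluster_columns):
    """Build DDL for a table partitioned by a DATE column and clustered."""
    cluster = ", ".join(cluster_columns)
    return (
        f"CREATE TABLE IF NOT EXISTS `{table}` (\n"
        f"  {partition_column} DATE,\n"
        f"  customer_id STRING,\n"
        f"  amount NUMERIC\n"
        f")\n"
        f"PARTITION BY {partition_column}\n"
        f"CLUSTER BY {cluster}"
    )


def incremental_scan_sql(table, partition_column, run_date, lookback_days=1):
    """Build a query restricted to a recent window of partitions.

    Filtering on the partition column with constant dates lets BigQuery
    prune partitions outside the window instead of scanning the full table.
    """
    start = run_date - timedelta(days=lookback_days)
    return (
        f"SELECT customer_id, SUM(amount) AS total_amount\n"
        f"FROM `{table}`\n"
        f"WHERE {partition_column} >= DATE '{start.isoformat()}'\n"
        f"  AND {partition_column} < DATE '{run_date.isoformat()}'\n"
        f"GROUP BY customer_id"
    )


# Hypothetical table and columns, used only for illustration.
TABLE = "analytics.transactions"
ddl = partitioned_table_ddl(TABLE, "event_date", ["customer_id"])
sql = incremental_scan_sql(TABLE, "event_date", date(2024, 3, 1))
print(ddl)
print(sql)
```

In practice, statements like these would be submitted through a BigQuery client or a scheduled query, and the run date would be supplied by the scheduler rather than hard-coded.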